Who are you? Where do you work?

Hi! I’m Jessica Powell, the Co-Founder of AudioShake. We are a start-up that separates the full mix of a song into its instrumental and stems. This allows artists and labels to open up their songs to more uses in sync licensing, remixes, remastering, spatial mixes, and more. I’m based in San Francisco, California.

What are you currently listening to?

Fuerza Regida, Juana Molina, Terrell Hines… Also, whatever my coworkers are posting to the music channel on a given day in Slack.

Give us a small insight into your daily routine…

Start-ups are pretty wild and no two days are the same. On any given day, I might be doing some combination of customer calls, managing payroll and bills, recruiting, and working on a PRD or some other product management work. I also work on our website and take meetings with the research or engineering teams.

Over to you, Jessica…

Why the Future of Audio Depends on the Parts of Your Song:

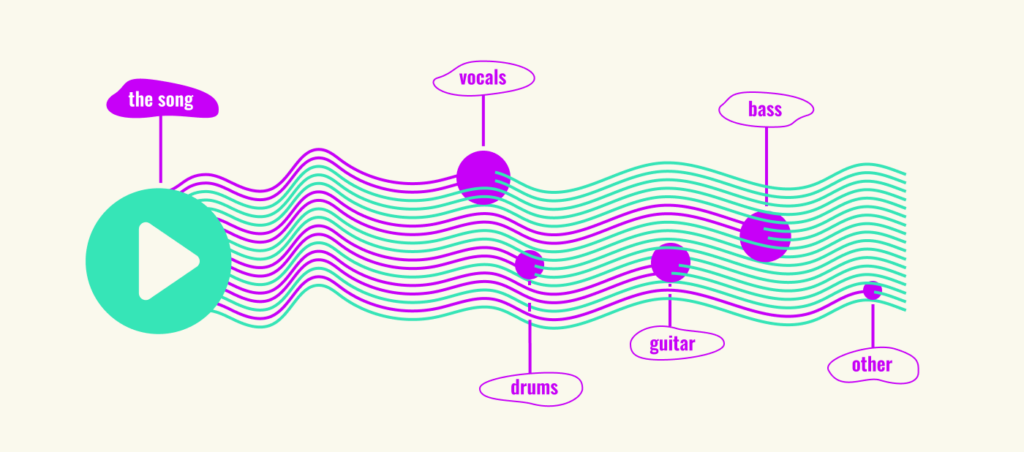

AudioShake sits at the intersection of music and tech, so one of the things we can see pretty clearly is how much of our future music experiences will be built on the parts of a song (called “stems”). Music, and content more generally, will be “atomized,” creating entirely new ways for fans to interact with music and video.

Today, the most common use of instrumentals and stems (e.g. the vocal or drum stem) is in sync licensing, remixes, and remasters. Those will continue to be important revenue and creative opportunities for artists and labels going forward.

But we are also moving into a world where music will be all around us. This means not just in the ways we know today (e.g. sitting in our car or bedroom, listening to a track), but also through new formats and experiences that are beginning to emerge. Pulling a song apart and remixing or re-arranging how, where, and when it is heard will be the basis for these new formats.

For example, on social media, we already see artists releasing stems or audio that allows fans to interact and play with the song at a deeper level. This will only grow as social media platforms build tools that make it even easier for fans to remix and re-imagine songs, without needing to know how to use a professional digital audio workstation.

In gaming and fitness, we’ll see the emergence of adaptive music: music that changes in relation to your environment and isn’t hard-coded into the game or platform. Your avatar could enter a scene, and each time the music might be different. Not only that, but the entire environment might contain elements of that song.

In music education, fans will be able to play along with their favourite original songs, isolating or muting a part such as the guitar or bass. In VR and metaverse-type worlds, the audio will be separated into stems and then spatialized to create a more immersive experience. And already, people are working on things like stem NFTs.

Of course, there are still a lot of challenges for all of this to work for artists.

For one, a ton of songs are missing their stems. Sometimes that is because of when the song was recorded, or simply because a hard drive crashed and session files were lost. In those cases, the music is largely limited to what can be done with the full mix. It’s particularly problematic in sync, where departments have told us they miss 30-50% of the opportunities that come to them because they can’t provide an instrumental in time, if at all. That’s the piece AudioShake is hoping to help solve. We have an Enterprise platform for labels and publishers, and a platform for indie artists. With these, they can create AI instrumentals and stems on demand, so they don’t miss out on opportunities.

Second, if we are going to move into a world where remixing, mash-ups, and algorithm-driven, interactive music experiences are the norm, the right infrastructure will need to be in place for artists to be paid. That also means stem use needs to be detected across the ecosystem. Remixes can be great marketing and promotional tools, but artists aren’t always paid for them, and remixers don’t see as much upside either. There is definitely a lot of room for improvement.

With that said, I think it’s exciting that there will be more opportunities for music and artists in the future. When recordings are opened up into their parts and instrumentals, an artist’s music has more chances to be listened to and enjoyed in new ways.

Where should readers go to find out more? Is there any further reading or digital gurus to recommend?

For general info about AudioShake, our website has lots of case studies and demos: audioshake.ai

Indie artists who would like to create stems for their work can go to AudioShake Indie: indie.audioshake.ai